Thanks to everyone who attended my DDD East Anglia talk “A Raspberry Pi2, Azure ML and Project Oxford to unlock that door!”, where I integrated a Raspberry Pi running Windows 10 IoT Core with the Service Bus, Project Oxford for face recognition and a Windows Store App to take my picture and hopefully unlock my door. Yes, I did bring a door with me. Thanks also for your nice comments.

I have started to put my code up on GitHub. The code for the Raspberry Pi is already there: https://github.com/sdspencer-mvp/RaspberryPi2-UnlockTheDoor. More will appear later as I tidy it up and remove all my config secrets.

I will be repeating this talk at Smart Devs in Hereford on 12 October 2015 and again at DDD North in Sunderland on 24 October 2015.

Following on from my last post, where I introduced Project Oxford, I’ve done a bit more work to take the project that was built and make it more visual. To summarise, Project Oxford is a set of APIs that build on top of Azure ML to provide Face, Speech, Computer Vision and Language Understanding Intelligent Service (LUIS). There was a good video from Build 2015 that I watched to get an overview of each of the APIs.

I used the tutorials to build an application that would identify a number of people from a known list in a photograph and highlight the ones that were unknown. The Face API requires people to be trained with a set of photos first, before any identification can be made. This was done using the code in the samples. I created a folder for each person that I wanted to train and added different photos of each person, with and without hats and sunglasses and with different expressions. Each folder was then passed to the training API. Once trained, you can use the rest of the Face API to first detect the faces in a picture and then take each face that is found and see whether it is known.
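The folder-per-person layout can be sketched as below. This is only an illustration in Python, not the sample code from the tutorials: the function name `collect_training_photos` and the list of image suffixes are my assumptions.

```python
from pathlib import Path

# Assumed set of photo file types; adjust to whatever your training photos use.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def collect_training_photos(root):
    """Map each person's folder name to the sorted list of photos inside it."""
    photos_by_person = {}
    for person_dir in sorted(Path(root).iterdir()):
        if not person_dir.is_dir():
            continue  # ignore stray files sitting next to the person folders
        photos = sorted(
            p for p in person_dir.iterdir()
            if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
        )
        if photos:  # skip folders that contain no usable photos
            photos_by_person[person_dir.name] = photos
    return photos_by_person
```

The resulting dictionary gives one entry per person, which is a convenient shape to hand to whatever code does the training.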

One useful tip I’ve found is to have Fiddler running whilst you are debugging as it is far easier to see any errors in the body of the response message than in the exceptions that are thrown. Details of the errors can be seen in the Face API documentation.

The process for training is as follows (note that the terminology is based around the SDK methods, but I’ve linked to the API page as this gives details about the errors etc.):

- Create a Person Group

- Create a Face list for each person using Face Detect

- Create a Person, one for each person you want to identify, with the person group id and face list

- Train the Person Group
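The order of the steps above can be sketched like this. The `client` object and all four of its method names are hypothetical stand-ins, not the real SDK or REST operation names, and the sketch assumes each training photo contains exactly one face.

```python
def train_people(client, group_id, photos_by_person):
    """Run the four training steps in order; return {person name: person id}.

    `client` is a hypothetical wrapper around the service. Its method names
    are illustrative only and do not come from the SDK.
    """
    # Step 1: create the person group.
    client.create_person_group(group_id)

    person_ids = {}
    for name, photos in photos_by_person.items():
        # Step 2: build the face list, one detected face id per photo
        # (assumes one face per training photo).
        face_ids = [client.detect_face(photo) for photo in photos]
        # Step 3: create the Person with the group id and the face list.
        person_ids[name] = client.create_person(group_id, name, face_ids)

    # Step 4: train the whole group once every person has been added.
    client.train_group(group_id)
    return person_ids
```

Passing the client in as a parameter keeps the ordering logic separate from the HTTP calls, so it can be exercised with a fake client before pointing it at the real service.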

Note: The training does not last forever and you will need to redo it periodically. If you try to detect a person after the training has expired, you will get an error response saying that the person group is unknown.

To identify each individual in a photograph:

- Stream the photograph into Detect. This will return a list of faces with face ids

- Iterate around each Face and call Identify

- Use the Identify Results to extract the names by calling Get Person.
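A minimal sketch of that detect, identify, get-person loop follows. The three callables are hypothetical stand-ins for the Detect, Identify and Get Person calls, and I am assuming that an unknown face comes back from `identify` as `None`.

```python
def name_faces(photo, detect, identify, get_person_name):
    """Return {face id: name, or None for an unknown face} for one photo.

    The callables are hypothetical stand-ins for the service calls:
      detect(photo)              -> list of face ids found in the photo
      identify(face_id)          -> person id, or None if the face is unknown
      get_person_name(person_id) -> the person's name
    """
    names = {}
    for face_id in detect(photo):
        person_id = identify(face_id)
        if person_id is None:
            names[face_id] = None  # detected, but not in the trained group
        else:
            names[face_id] = get_person_name(person_id)
    return names
```

Keeping the result keyed by face id matters later, because the face id is the only thing that links a name back to the face rectangle from detection.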

This is where I got to with the previous post, but this wasn’t very visual, and as I was working with photographs I thought it would be useful to use the data returned to draw a box around each face that was identified and add the name of the person underneath. This also made it easy to see which people were identified incorrectly. The Project Oxford web site had an image showing exactly this kind of result.

I wanted to emulate this and also to take it one step further. The data returned from the face detection API provides details about gender, age, the area (face rectangle) in the picture where the face was found, face landmarks and head pose. What the detection API does not do is tie the name of the person to the face. We do already have this information, as it was returned from the Identify API and Get Person. The attribute that links them is the face id. Using the results of the Identify API, I called Get Person for each face identified to return the person’s name and stored this in a Dictionary along with the face id. This then allowed me to load the original photograph into memory, draw the rectangle for each face and add the text below it, using the face id to extract the rectangle and match the name from the Dictionary. The result could then be scaled and shown in the app.
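The matching step can be sketched without any drawing library by working out where each box and label should go. This is an illustration only: the function name, the `(left, top, width, height)` tuple layout, the `label_gap` and the "Unknown" fallback are all assumptions of mine, and the actual drawing is left to whatever image API the app uses.

```python
def build_annotations(faces, names, scale=1.0, label_gap=4, unknown="Unknown"):
    """Pair each face rectangle with its name and a label position.

    faces: {face id: (left, top, width, height)} from detection (assumed layout)
    names: {face id: person name} built from Identify and Get Person
    scale: factor applied when the photo is shown smaller or larger in the app
    """
    annotations = []
    for face_id, (left, top, width, height) in faces.items():
        # Box corners as (x1, y1, x2, y2), scaled to the displayed size.
        box = (
            left * scale,
            top * scale,
            (left + width) * scale,
            (top + height) * scale,
        )
        annotations.append({
            "box": box,
            # The face id is the link between the rectangle and the name.
            "label": names.get(face_id, unknown),
            # The name goes just below the bottom-left corner of the box.
            "label_pos": (box[0], box[3] + label_gap),
        })
    return annotations
```

Faces that were detected but never identified simply fall back to the `unknown` label, which makes the unrecognised people easy to spot in the picture.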

I’ve wanted to use Azure Machine Learning for a while but didn’t know where to start. Microsoft have released some gallery applications for Azure ML to take away some of the complexity and make it easy for developers to use the service. One item in the gallery that will be useful is Project Oxford. Project Oxford offers a number of features and the one I am going to talk about here is the Face API.

With the Face API you can train Azure ML with pictures of a number of people and then use the matching API to see whether any of the trained people appear in an image.

This is easy to set up and there is a good tutorial here: http://www.projectoxford.ai/doc/face/How-To/identifyperson

Firstly you will need to sign up and get a subscription key: http://www.projectoxford.ai/doc/general/subscription-key-mgmt

Log in to the Azure portal with an Azure subscription. The link should open the marketplace. Scroll down to find Face APIs, then click through to the purchase button and purchase. This API is currently free.

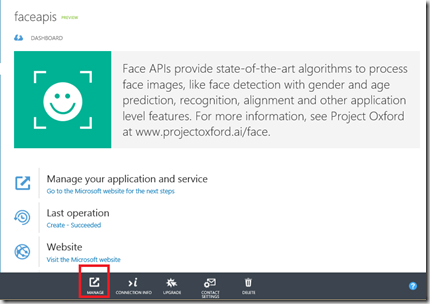

Your Face API service will now be created. Once it is complete you need to extract the keys for use in your app. Click on your Face API service, then click the Manage button.

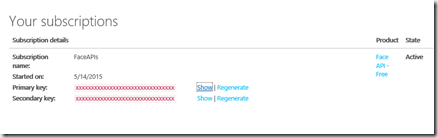

Click on Show to view your key and copy it into your application.

Download the Face API SDK from https://www.projectoxford.ai/sdk, unzip it and add it to your project, then add a reference in your application.

Follow the code here: http://www.projectoxford.ai/doc/face/How-To/identifyperson

Be aware that when this is run you may get a bad request error when creating a Person Group (I used Fiddler to see the error). This seems to be due to case sensitivity: when I made the parameters lower case it worked! The sample code above is mixed case but the service seems to want all lowercase. Details of the error messages can be found here: https://dev.projectoxford.ai/docs/services/54d85c1d5eefd00dc474a0ef/operations/54f0387249c3f70a50e79b84 The body of the response contains the exact details of the error.
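One way to guard against this is to normalise the id before it is sent. A tiny sketch, where the helper name is hypothetical and lowercasing is the only rule applied, since that is all I observed the service wanting:

```python
def normalise_group_id(group_id):
    """Lowercase a person group id before sending it to the service.

    Only lowercasing (plus trimming surrounding whitespace) is applied here;
    any other restrictions on the id are not covered by this sketch.
    """
    return group_id.strip().lower()
```

Using the same helper everywhere the id is passed (create, train, identify) avoids the group being created under one spelling and looked up under another.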

There are limitations on file size, so I ended up editing my photos down to below 4MB.
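A quick pre-flight check saves a round trip to the service. In this sketch the helper name is hypothetical and "4MB" is read as 4 × 1024 × 1024 bytes, which is an assumption; check the API documentation for the exact limit.

```python
import os

# Assumption: "4MB" is taken to mean 4 * 1024 * 1024 bytes.
MAX_IMAGE_BYTES = 4 * 1024 * 1024


def is_small_enough(path, limit=MAX_IMAGE_BYTES):
    """Return True when the file at `path` is strictly below the size limit."""
    return os.path.getsize(path) < limit
```

Running this over the training folders before uploading shows which photos still need to be resized or recompressed.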

Once trained, you can detect multiple people in one photograph and it will identify those that it knows.

I've trained it with a number of people especially as my daughter was identified as her mum :-)

Now that I've added her to the training files she is no longer mistaken for her mum.

You might need to play around with the training files especially to take into account hats and glasses.

Enjoy